Pandas provides several highly effective way to select rows from a DataFrame that match a given condition from column values within the DataFrame. Similar to SQL’s SELECT statement conditionals, there are many common aspects to their functionality and the approach.

To perform selections on data you need a DataFrame to filter on. In this tutorial we use an open source data file from FSU with some information on housing to perform our analysis. In the section below we quickly import pandas and read in the .csv file into a DataFrame.

Once we have the DataFrame, you can get yourself quickly familiar with the data using DataFrame.head() (or df.head()) or DataFrame.describe(). By viewing the data you’ll see it’s all mainly integer and float values in the DataFrame.

Select Rows On a Single Column Condition

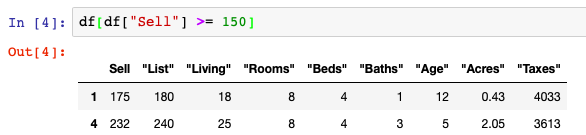

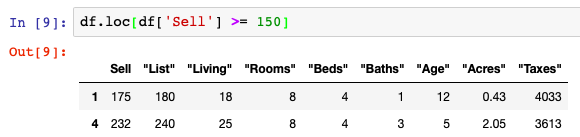

The first approach to filtering the DataFrame on a condition that we use is one of the more common approach. We filter based on some specific operator to get some result similar to a query. The operation below filters the Sell column where the value is greater than or equal to 150.

This this type of conditional can be used on string, integer, and other data types within Pandas such as ==, <, >, etc. as well as other functions that have True & False values returned in the Series, such as str.contains().

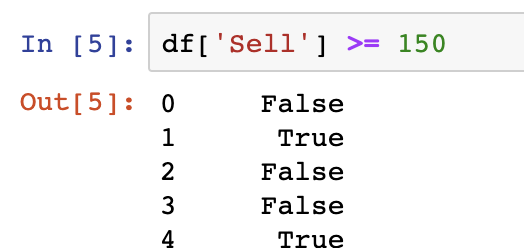

In the example above, what we are actually doing is returning a list of True & False values back into the DataFrame to provide the mechanism that allows data selection. Within the main df[] what we return is actually something like the below:

Select Rows On a Multiple Column Condition

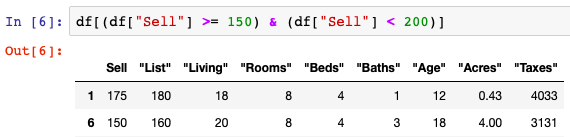

Now that the fundamentals of data selection are understood, we can move on to selecting based on multiple criteria within a DataFrame. This is achieved by passing in data in multiple Series objects within a list based on some operator (&, or, etc.) Our example below filters on data where the Sell value is greater than or equal to 150 and also lower than 200. You can then select rows from a Pandas DataFrame based on these criteria as well as adding in other conditions in a similar pattern.

Pandas actually returns as single Series of True False values to the DataFrame for the condition to be applied.

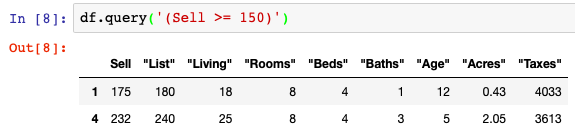

Another option is the use of the DataFrame.query() function on the DataFrame object. The syntax here is interesting as the query needs to be written in a string format for the conditional to work.

DataFrame.loc can also be used to select data using a similar format as our first example. Here we pass the same Series of True and False values into the DataFrame.loc function to get the same result.

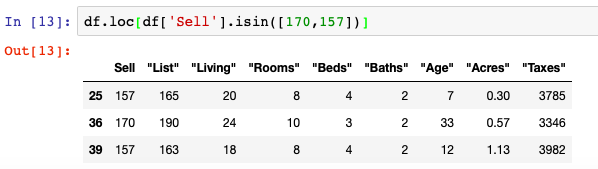

Use .loc to Select Rows

For conditionals that may involve multiple criteria similar to an IN statement in SQL, we have the .isin() function that can be applied to the DataFrame.loc object.

Filter Out Rows Using Regex

One topic not covered in the Jupyter Notebook structure we have here is the use of filtering logic for string values as our dataset doesn’t contain string values. We can however point to the easiest technique in Pandas for filtering this way which is the use of the .str.contains(). We can use Regex filtering on strings in a Series by inserting the Regex logic into the contains() function. An example would be DataFrame.Series.str.contains(‘^f’), which would find any value starting with f in the given Series.

For more on how to use Indexing and Selecting data from the officials Pandas documentation, please see the following link. More information on common Pandas operations can be found in our detailed tutorials and on our GitHub.